OLED Displays and the Immersive Experience

After generating much initial excitement over the past several years, AR/VR technology deployment has hit some recent roadblocks. New applications, facilitated by OLED technology, could jump-start this technology yet again.

by Barry Young

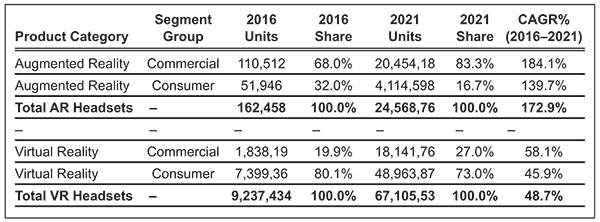

In 2014, Facebook founder Mark Zuckerberg plunked down a reported $US 3 billion for virtual-reality (VR) system maker Oculus and said, “We believe this kind of immersive, augmented reality will become a part of daily life for billions of people.”[1] IDC, a leading technology market research firm, was so taken by the promise of a new paradigm for consumer and commercial applications that it later forecast that shipments would grow from 9.2 million units in 2016 to 67.1 million units in 2021, as shown in Fig. 1.[2]

Fig. 1: Worldwide AR and VR headset shipments are forecasted here to increase dramatically from 2016 to 2021. Source: IDC

Three years later, in 2017, Zuckerberg testified in a technology-theft case brought against Oculus by ZeniMax Media that VR had not taken off as quickly as he had anticipated and that VR sales “won’t be profitable for quite a while.”[3] VR, in short, isn’t as hot as everyone thought it would be, and the evidence is compelling. For most people, the cost is prohibitive: headsets start at $300 to $500 and go up from there if motion controllers, eye trackers, and other accessories are added. Plus, the headset is often tethered to a powerful computer, which costs $500 to $1,500 and limits the user’s mobility.

Sony’s PlayStation VR, designed to work with the tens of millions of installed PlayStation 4 consoles, was expected to set the industry on its ear, but early enthusiasm for both the games and the content quickly dissipated. Google’s well-publicized Glass never reached the masses and has since been relegated to industrial applications.

Mixed Terminology

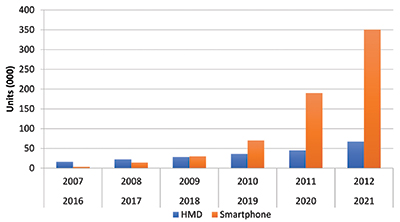

When comparing the build-up to the reality, it may be useful to note that neither augmented reality (AR) nor VR has reached the growth levels that smartphones achieved in their early years, as shown in Fig. 2. Beyond the reasons listed above, the technology has also failed to take off because experts have confused consumers with terms like virtual, mixed, and augmented reality, or immersive computing, without clearly defining them. (In short, virtual reality immerses the user in a digital world; he cannot see anything but that world. Augmented reality lets the user see the real world but overlays that physical view with digital content. For more about the differences between AR and VR, see the article “LCOS and AR/VR,” also in this issue.)

Fig. 2: The VR/AR market from 2016 to 2021 has not even begun to keep pace with the smartphone market from 2007 to 2012. Source: Canaccord Genuity, Trendforce, OLED-A

So despite all the hype, we still don’t have the “next big thing,” although we seem to have pretty much defined it: a lightweight, always-on device that obliterates the divide between the real world and the one created by our computers.

“We know what we really want: AR glasses,” said Oculus’s chief scientist Michael Abrash at Facebook’s F8 developers’ conference in April 2017. “They aren’t here yet, but when they arrive they’re going to be the great transformational technologies of the next 50 years.” He predicted that in the near future, “instead of carrying stylish smartphones everywhere, we’ll be wearing stylish glasses.” And he added that “these glasses will offer AR, VR, and everything in between, and we’ll wear them all day and we’ll use them in every aspect of our lives.”

About AR/VR

Augmented reality/virtual reality (AR/VR) are also expected to re-energize 3D after its failures in the film and TV industries. While commonly used apps like Facebook, texting, and FaceTime are 2D, AR/VR is a 3D phenomenon. Web design must change if, instead of scrolling up/down and left/right, the web page itself occupies a 3D space. Instead of studying pictures of human anatomy, users could explore every part of a human body, inside and out, through a more natural interface, since we physically operate in 3D. Some believe that AR/VR will not have “arrived” until we use it for mundane and boring tasks, and some industries are already adapting:

• Certain medical studies have shown that VR can be more effective than morphine for pain management in burn units.[4]

• Following a year of VR therapy, paraplegics participating in a study at Duke University began to regain certain functions, such as bladder control.[5]

• GE is training workers in tasks such as jet engine repair using Glass (formerly Google Glass) and AR technology, as shown in Fig. 3.[6]

Fig. 3: A company called Upskill, which received backing from GE Ventures, has started working with Glass (formerly Google Glass) and GE Aviation to build an AR solution that connects to a smart torque wrench to ensure that every step in building a jet engine that requires tightening nuts is performed correctly. Source: GE Reports

AR/VR Functionalities

AR/VR products require a range of functionalities that go far beyond the typical display on an advanced smartphone, including:

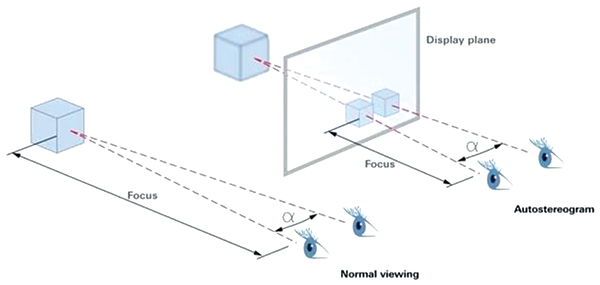

Stereoscopic Vision: Creates the perception of depth and 3D structure. To achieve this, the system must generate a separate image for each eye, each slightly offset from the other to simulate parallax, as shown in Fig. 4. The system should run at a minimum of 60 frames per second to avoid perceived lag that might break the illusion or, worse, cause the nausea often associated with poorly performing systems.

Fig. 4: Normal “naked-eye” viewing (at left) is compared with an autostereoscopic viewing system at right. Source: Samsung
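The offset-image idea above can be made concrete with a small Python sketch. It assumes a typical 63-mm interpupillary distance (IPD) and an unrotated head, places one virtual camera at each eye, computes the vergence angle to a point straight ahead at a near and a far distance, and shows the per-frame time budget implied by the 60-fps minimum. The function names are illustrative only and are not taken from any headset SDK.

```python
import math
from typing import Tuple

# Assumption: 63 mm is a typical adult interpupillary distance (IPD);
# real headsets measure it or let the user adjust it.
DEFAULT_IPD_M = 0.063

Point = Tuple[float, float, float]


def eye_positions(head: Point, ipd_m: float = DEFAULT_IPD_M) -> Tuple[Point, Point]:
    """Left/right virtual-camera positions, each offset half the IPD from the head center.

    Simplification: the head is not rotated, so the line between the eyes
    lies along the world x-axis.
    """
    x, y, z = head
    half = ipd_m / 2.0
    return (x - half, y, z), (x + half, y, z)


def vergence_deg(distance_m: float, ipd_m: float = DEFAULT_IPD_M) -> float:
    """Angle between the two eyes' lines of sight to a point straight ahead.

    The difference in this angle between near and far objects is the
    binocular depth cue that the two offset images encode.
    """
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def frame_budget_ms(fps: float) -> float:
    """Time available to render both eye images for one displayed frame."""
    return 1000.0 / fps


left, right = eye_positions((0.0, 1.7, 0.0))
print("left eye:", left, "right eye:", right)
print(f"vergence at 0.5 m: {vergence_deg(0.5):.2f} deg; at 10 m: {vergence_deg(10.0):.2f} deg")
print(f"frame budget at 60 fps: {frame_budget_ms(60):.1f} ms")
```

Nearby objects produce a much larger vergence angle than distant ones, which is why the two rendered images must differ; both images must also be finished within the same per-frame budget.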

Optics: A pair of lenses sits in front of the eyes, allowing them to converge the two images into something like the image in Fig. 5. The lenses also correct distortions so that the brain perceives the images with a sense of depth.

Fig. 5: Stereoscopic systems show two images to users. The images converge to create the perception of depth. Source: Oculus

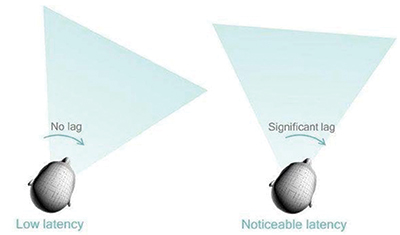

Motion Tracking: The art of tricking the brain into believing it is in another place by tracking movements of the head (Fig. 6) and updating the rendered scene without perceptible lag.

Fig. 6: Latency is an important consideration for motion-tracking systems. Source: OLED-A
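To see why latency matters, consider a rough estimate: if the head turns at a steady rate while a frame is in flight, the displayed scene trails the real head direction by rate × latency. The Python sketch below uses hypothetical inputs (a 100°/s head turn and about 12 pixels per degree) and ignores the pose prediction that many tracking systems use to hide part of the delay.

```python
def lag_error_deg(head_speed_dps: float, latency_ms: float) -> float:
    """Angle by which the rendered scene trails the real head direction,
    assuming the head turns at a constant angular velocity during the
    motion-to-photon delay."""
    return head_speed_dps * latency_ms / 1000.0


def lag_error_px(head_speed_dps: float, latency_ms: float,
                 pixels_per_degree: float) -> float:
    """The same error expressed in display pixels."""
    return lag_error_deg(head_speed_dps, latency_ms) * pixels_per_degree


# Hypothetical inputs: a moderate 100 deg/s head turn viewed on a headset
# with about 12 pixels per degree.
HEAD_SPEED_DPS = 100.0
PPD = 12.0
for latency_ms in (50.0, 20.0, 10.0):
    print(f"{latency_ms:4.0f} ms -> {lag_error_deg(HEAD_SPEED_DPS, latency_ms):.1f} deg "
          f"({lag_error_px(HEAD_SPEED_DPS, latency_ms, PPD):.0f} px) behind the head")
```

Because the error scales linearly with latency, halving the delay halves how far the image swims behind the head.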

Array of Sensors (Gyroscopes, Accelerometers, etc.): These track the movement of the head. The tracking data is passed to the computing platform, which updates the rendered images accordingly. Beyond head tracking, advanced VR/AR systems aiming for better immersion also track the user’s position in the real world.
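One common way to combine gyroscope and accelerometer data is a complementary filter. It is shown here only as an illustration of sensor fusion, not as the method any particular headset uses. The Python sketch below tracks a single axis (pitch): the gyro rate is integrated for smooth short-term response, while a small weight on the accelerometer's gravity-based tilt pulls the estimate back and keeps it from drifting. The blend factor `alpha = 0.98`, the 1-kHz sample rate, and the 0.5°/s gyro bias are hypothetical values chosen for the demonstration.

```python
import math
from typing import Tuple


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch implied by the measured gravity vector.

    Only trustworthy when the head is not otherwise accelerating.
    """
    return math.degrees(math.atan2(-ax, math.hypot(ay, az)))


class ComplementaryFilter:
    """Blends an integrated gyro rate (smooth but drifting) with an
    accelerometer tilt estimate (noisy but drift-free)."""

    def __init__(self, alpha: float = 0.98) -> None:
        self.alpha = alpha        # hypothetical weight given to the gyro-integrated estimate
        self.pitch_deg = 0.0

    def update(self, gyro_pitch_rate_dps: float,
               accel: Tuple[float, float, float], dt_s: float) -> float:
        gyro_est = self.pitch_deg + gyro_pitch_rate_dps * dt_s   # integrate angular rate
        accel_est = accel_pitch_deg(*accel)                      # gravity-referenced tilt
        self.pitch_deg = self.alpha * gyro_est + (1.0 - self.alpha) * accel_est
        return self.pitch_deg


# Simulated input: head held still, pitched 30 degrees, sampled at 1 kHz by a
# gyro with a hypothetical 0.5 deg/s bias.
G = 9.81
theta = math.radians(30.0)
still_accel = (-G * math.sin(theta), 0.0, G * math.cos(theta))

f = ComplementaryFilter()
for _ in range(2000):                # 2 s of samples
    pitch = f.update(0.5, still_accel, 0.001)
print(f"fused pitch: {pitch:.2f} deg")
```

Integrating the biased gyro alone would drift without bound; with the accelerometer term, the estimate settles near the true 30° with a small residual offset of alpha × bias × dt / (1 − alpha).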

Head-Mounted Display: The head-mounted display lets the user view superimposed graphics and system-generated text or animation. Two head-mounted design concepts are being researched: video see-through systems and optical see-through systems. Video see-through systems block the user’s direct view of the outside environment and instead show it in real time through a camera mounted on the headgear; latency in updating the image whenever the user moves his head is an issue. Optical see-through systems, on the other hand, let the user view the world directly; some use technology that “paints” images directly onto the user’s retina through rapid movement of the light source. Cost is an issue, but researchers believe this approach is more portable and inconspicuous, holding more promise for future augmented-reality systems.

Tracking and Orientation: The tracking and orientation system includes GPS to pinpoint the user’s location relative to his surroundings and also tracks the user’s eye and head movements. Major hurdles include the complexity of tracking both overall location and user movement and of adjusting the displayed graphics accordingly. Even the best systems developed so far still suffer from latency between the user’s movement and the display of the image.

Computing Platform: The mobile computers currently available are still not powerful enough to create the needed stereo 3D graphics. Graphics processing units such as the NVIDIA GPU by Toshiba and ATI Mobility 128 16-MB graphics chips are, however, being integrated into laptops to merge current computer technology with augmented-reality systems.

AR in Action

For a prime example of how AR could ideally function, we need look no further than a product built years ago by Lockheed for fighter pilots: helmets with amazing capabilities (Fig. 7)! These helmets, specially designed for the US military, let fighter pilots “see through their planes,” eliminating blind spots and giving them an extra edge. The headgear, used specifically by pilots flying the Lockheed Martin F-35 Lightning II, displays images from six cameras mounted around the fighter, giving the pilot a clear view of the outside world without the need for extra equipment. An array of lights on the back of the Lightning II helps guide a head-tracking system that keeps projected information in close sync with head movements, so a pilot caught in a dogfight doesn’t have to wait crucial moments for flight information to drift into view. The images projected on the helmet’s screen correspond to points around the plane that the pilot would normally be unable to see, such as the sky behind him. A pilot “can look between his legs and can see straight to the ground” instead of the plane’s floor, test pilot Billie Flynn said, noting that the helmet gives the pilot “situational awareness.”[7] The helmet takes all the information typically shown on various cockpit screens, including speed and altitude readings, and brings it inside the mask, Flynn said. Of course, the consumer isn’t seeking a big helmet, six cameras, oxygen, and a $400,000 price tag.

Fig. 7: The F-35 Lightning II helmet offers powerful AR capabilities but isn’t feasible for the consumer marketplace. Source: Lockheed Martin

The Display Industry Tackles Immersiveness

The challenges to achieving a totally immersive experience are huge, but the display industry appears to be up to its portion of the task. Two display technologies are vying for this market, LCDs and OLEDs, both as direct-view displays and as microdisplays used with projection optics. Their characteristics are shown in Table 1.

Table 1: Current Maximum Performance Spec Comparison. Source: OLED-A

| Characteristic | Units | Direct-view OLED | Direct-view LCD | Micro OLED (microdisplay) | LCOS (microdisplay) |
|---|---|---|---|---|---|
| Pixel density | ppi | ~1,000 | ~1,000 | ~2,000 | ~2,000 |
| Pixel pitch | μm | 25 | 25 | 10 | 10 |
| Response time | ms | 0.1 | 5 | 0.1 | 5 |
| Contrast ratio | | ∞ | 1,500:1 | ∞ | 1,500:1 |
| Luminance | cd/m² | 1,200 | 2,500 | 2,000 | 2,500 |
| Backplane | | p-Si | p-Si | c-Si | c-Si |
| Mobility | cm²/V·s | ~100 | ~100 | ~1,000 | ~1,000 |
| Optimal viewing distance | inches | 1.6 | 1.6 | 0.8 | 0.8 |

LCOS = liquid crystal on silicon
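Pixel density and pixel pitch in Table 1 express the same quantity: because an inch is 25,400 μm, ppi = 25,400 ÷ pitch in μm. The short Python sketch below converts in both directions. By this arithmetic a 25-μm pitch works out to about 1,016 ppi and a 10-μm pitch to about 2,540 ppi, so the table's ppi figures should be read as rounded approximations.

```python
MICRONS_PER_INCH = 25_400.0


def ppi_from_pitch_um(pitch_um: float) -> float:
    """Pixels per inch for a square pixel of the given pitch in micrometers."""
    return MICRONS_PER_INCH / pitch_um


def pitch_um_from_ppi(ppi: float) -> float:
    """Pixel pitch in micrometers for the given pixel density."""
    return MICRONS_PER_INCH / ppi


for pitch in (25.0, 10.0):
    print(f"{pitch:.0f} um pitch -> {ppi_from_pitch_um(pitch):,.0f} ppi")
for ppi in (1000.0, 2000.0):
    print(f"{ppi:,.0f} ppi -> {pitch_um_from_ppi(ppi):.1f} um pitch")
```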

OLEDs are the technology of choice for VR because of their advantages in latency, contrast ratio, response time, and black levels. They are used in all the popular VR headsets (Vive, Rift, and PlayStation VR). It has commonly been assumed that, because AR requires high luminance (it must operate in any ambient condition), liquid crystal on silicon (LCOS) with a projection prism optic would be the best display medium for AR. But thanks to improvements in material lifetimes, transparent direct-view OLEDs can now operate at >1,000 nits and are suitable for AR as well.

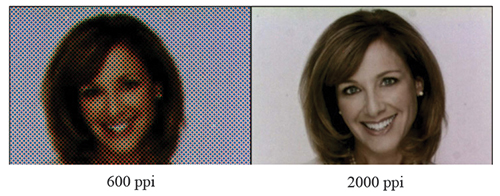

The challenge is to make displays that are comfortable for near-to-eye use, have a wide field of view (FOV), support 3D, and operate in any ambient environment, dark or bright. Microdisplays help because their smaller pixels and higher pixel density (ppi) eliminate the screen-door effect, the visible grid between pixels that appears when resolution is insufficient for the distance between the eye and the display (Fig. 8).

Fig. 8: The “screen-door effect” with lower resolution appears at left. Source: eMagin
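Behind headset optics, what the eye notices is how many pixels fall within each degree of view, not ppi alone. The Python sketch below computes average pixels per degree (ppd) for a few hypothetical per-eye configurations and the pixel count needed to reach a target ppd. The target of ~60 ppd (about one arcminute per pixel, a commonly cited match for 20/20 acuity) is an assumption introduced here, not a figure from this article, and lens distortion is ignored.

```python
import math

# Assumption: ~60 pixels per degree (1 arcminute per pixel) is a commonly cited
# target for matching 20/20 visual acuity; it is not a figure from the article.
ACUITY_PPD = 60.0


def pixels_per_degree(pixels_across: int, fov_deg: float) -> float:
    """Average angular resolution when `pixels_across` pixels are spread over
    `fov_deg` by the headset optics (ignores non-uniform lens distortion)."""
    return pixels_across / fov_deg


def pixels_needed(fov_deg: float, target_ppd: float = ACUITY_PPD) -> int:
    """Pixels required across the field of view to reach `target_ppd`."""
    return math.ceil(fov_deg * target_ppd)


# Hypothetical per-eye configurations.
for px, fov in ((1080, 100.0), (3000, 100.0), (2048, 40.0)):
    print(f"{px} px over {fov:.0f} deg -> {pixels_per_degree(px, fov):.1f} ppd")
print(f"pixels needed across 100 deg at {ACUITY_PPD:.0f} ppd: {pixels_needed(100.0)}")
```

The trade-off is visible directly: widening the FOV without adding pixels lowers ppd and makes the pixel grid easier to see.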

In search of such a display, I went to Newton, MA, just outside Boston, to visit Kopin, a small public company. Kopin is a worldwide leader in LCOS and recently announced a new OLED microdisplay. The company is well positioned in the AR industry as the primary supplier of microdisplays for Google’s Glass and the supplier of the LCOS displays for the F-35 Lightning II helmets. Dr. John Fan, CEO of Kopin, explained that if the helmets were designed today, he would probably use OLED microdisplays because of their fast response time and higher contrast. Kopin believes that to attract consumers and thereby reach billions of units per year, a successful AR product must:

• Be smaller and more like glasses than current headsets

• Have higher resolution – probably 3K to eliminate the screen-door effect of current displays

• Support fast refresh rates and response times to eliminate latency: 90 Hz to 120 Hz with μs response

• Be untethered from a high-end PC. The smartphone would be a good substitute, but graphics processors need to reach the next level of performance to handle the bandwidth

• Support luminance >1,000 nits to handle all the artifacts, even in a partially light-controlled environment

• Include built-in motion controllers, eye trackers, and other accessories

• Incorporate batteries built on flexible substrates with double the capacity of today’s batteries
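The bandwidth concern in the list above can be sized with back-of-the-envelope arithmetic. The Python sketch below assumes a hypothetical reading of “3K” as 3,000 × 3,000 pixels per eye at 24-bit color and computes the raw, uncompressed pixel data rate to both displays; it ignores blanking intervals and any display-stream compression, both of which change the real link requirement.

```python
def raw_video_gbps(width: int, height: int, refresh_hz: float,
                   bits_per_pixel: int = 24, eyes: int = 2) -> float:
    """Uncompressed pixel data rate to the displays, in gigabits per second.

    Ignores blanking intervals and compression (simplifying assumption).
    """
    return width * height * bits_per_pixel * refresh_hz * eyes / 1e9


# Hypothetical reading of "3K": 3,000 x 3,000 pixels per eye, 24-bit color.
for hz in (90, 120):
    print(f"{hz} Hz: {raw_video_gbps(3000, 3000, hz):.1f} Gbit/s")
```

Under these assumptions the raw rate lands in the tens of gigabits per second, which the GPU must render and the link must carry every second.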

I also went to Hopewell Junction, NY, to visit an even smaller public company, eMagin. According to eMagin CEO Andrew Sculley, immersive AR needs a display with full color, high luminance, high contrast, high pixel density for a wide FOV, high speed, and capabilities such as global shutter and low persistence. eMagin believes OLED is preferable to LCOS for AR because it supports the contrast levels needed, has faster response times, and can reach pixel densities of over 2,000 ppi with luminance levels above 5,000 nits. (eMagin demonstrated a 5,000-nit display with over 2,600 ppi at Display Week 2017.) According to eMagin, it is the only company that has shown direct-patterned OLED at pixel densities above 2,000 ppi and the only US company that manufactures OLED microdisplays. Its direct-patterned displays are bright because they don’t use color filters, which by design block two thirds of the light, and because they use a more efficient OLED stack for each color. Aviation is one established AR application: eMagin is now in a major helicopter program and is in qualification for a multi-service fixed-wing aircraft program.
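The color-filter point above is simple division: if a filter passes only a fraction of the emitted light, the emitter must be driven that much brighter to deliver the same output. The Python sketch below uses idealized round numbers (one-third transmission for a white OLED with color filters, full transmission for direct-patterned emitters); real filter transmission and stack efficiency differ from these assumptions.

```python
def emitter_luminance_needed(target_nits: float, filter_transmission: float) -> float:
    """Luminance the OLED stack must produce so that `target_nits` survives the filter."""
    if not 0.0 < filter_transmission <= 1.0:
        raise ValueError("filter_transmission must be in (0, 1]")
    return target_nits / filter_transmission


# Idealized cases (assumed values): a color filter passing one third of white
# light versus direct-patterned RGB emitters with no filter.
for label, t in (("white OLED + color filter", 1 / 3), ("direct-patterned RGB", 1.0)):
    print(f"{label}: {emitter_luminance_needed(5000, t):,.0f} nits at the emitter")
```

In this idealized case, delivering 5,000 nits through a one-third-transmission filter would require roughly three times that luminance at the emitter, which stresses OLED lifetime.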

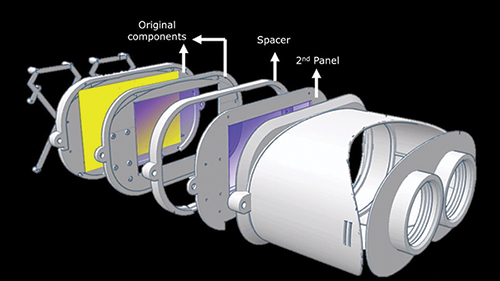

Fig. 9: eMagin’s 2K × 2K OLED microdisplay allows more streamlined headset designs than standard direct-view displays do. Source: eMagin

Further Opportunities

Related AR approaches include light-field displays and holograms. A hologram is a photographic recording of a light field, rather than of an image formed by a lens, and is used to display a fully 3D image of a “holographed” subject. Even in these designs, however, a basic imaging device such as an OLED or LCD is still necessary, as shown in Fig. 10 (which uses an LCD but could also use an OLED).

Fig. 10: This light-field headset includes an embedded LCD. Source: Stanford Labs

Magic Leap, perhaps the most highly publicized startup ever, a company valued at more than $US 2 billion before it even had a product and that Wired called “the World’s Most Secretive Startup,” recently announced an AR product to be released in 2018 (Fig. 11). The design is so secret that its display technology (OLED, LCD, or something else) has not been announced.

Fig. 11: The Magic Leap AR headset has been much discussed, but isn’t yet widely understood. Source: Magic Leap

Brave New World

The chasm between where we are today and where we need to be to achieve the lofty goals of immersion can be understood by comparing the VR/AR experiences achievable today with what would be needed to create experience-simulation systems like those depicted in the movies Inception and The Matrix. To achieve true, reality-like immersive experiences, the human body must be modified and computers connected directly to the brain. That won’t happen until we can integrate prosthetic eyes, ears, and limbs with the nervous system, something that is still very much in the experimental stage. Devices and signal transceivers would have to be surgically implanted. A responsive, Matrix-like simulation with the resolution or detail needed to completely fool the human brain is well beyond the reach of today’s hardware and software. Ray Kurzweil, in his book The Singularity Is Near, projects these capabilities for around 2035, less than 20 years from now.

References

[1] J. Lazauskas, “How Virtual Reality Will Change Life in the Workplace,” Fortune, 2014.

[2] “Worldwide Shipments of Augmented Reality and Virtual Reality Headsets Expected to Grow at 58% CAGR with Low-Cost Smartphone VR Devices Being Short-Term Catalyst,” IDC, June 2017.

[3] vrzone.com

[4] www.gq.com/story/burning-man-sam-brown-jay-kirk-gq-february-2012

[5] https://today.duke.edu/2016/08/paraplegics-take-step-regain-movement

[6] www.ge.com/reports/smart-specs-ok-glass-fix-jet-engine/

[7] https://www.f35.com/about/capabilities/helmet

Barry Young is CEO of the OLED Association. He can be reached at barry@oled-a.org.