Microdisplays, Near-to-Eye, and 3D

New display technologies, including some new twists on tried-and-true display technologies, are helping displays integrate ever more seamlessly with the devices we use every day.

by Steve Sechrist

AT THIS YEAR’S Display Week in San Jose, California, we saw a growing renaissance of some tried-and-true display technologies, especially new use models for microdisplays in both the consumer-wearable and automotive markets, the latter in the form of head-up displays (HUDs). These use models are being enabled by breakthroughs in size, weight, and power consumption, aided in part by new compound semiconductor materials, all discussed below. The trend even goes beyond displays: some microdisplay companies are now targeting industrial optical inspection and sensing in very-high-tolerance manufacturing, and some now obtain more than half of their revenue from non-display applications.

Near-to-eye (NTE) and 3D displays are also experiencing a resurgence, particularly when empowered by eye-tracking sensors and algorithms that boost system understanding of “user intent.” These technologies are being used to help generate autostereoscopic 3D solutions and light-field holographic displays that begin to push the boundaries of current display capabilities.

Meanwhile, in the HUD space, we discovered new film technologies that transform simple glass (in the car and elsewhere) into next-generation displays that, when combined with the latest sensor technology, could bring to reality a display future that was only dreamed about in sci-fi films and literature just a few short years ago. It’s exciting to see these older technologies rising again with some new twists.

NTE Technology for Wearables

Wearables are one of the fastest-growing markets in the microdisplay category. This fact is not lost on headset-maker Kopin, a company that was in the wearable-display business before it was even called that (see Fig. 1 for some examples of its applications in various devices). Kopin’s Dr. Ernesto Martinez-Villalpando presented at Display Week’s IHS-sponsored Business Conference, explaining how augmenting the human visual system with HUD or NTE devices offers the opportunity to move beyond simple data interfaces such as display monitors or smartphones. Much like bionic prosthetic limbs, HUD and NTE technologies begin to address the possibility of true augmentation.

Fig. 1: Kopin demonstrated a tabletop full of applications for its small display components.

As an example, Martinez-Villalpando outlined the design goals of Kopin’s “Pupil” display module: size, weight, battery life, and display resolution. The technology enables augmented-reality applications that have already proven valuable in supporting and documenting complex service and maintenance operations in the field. For instance, rather than carrying a thick operations manual up a tower antenna or to a wind turbine needing maintenance, service personnel can call up specific operational procedures, with the added benefit of documenting the maintenance that took place. Beyond B2B, other applications include enhanced situational awareness and even the ability to see through buildings to know what is on the next street, the next-best thing to X-ray vision.

We see the Kopin NTE device as a technology milestone in a space pioneered by other leaders in the field, including Google, with its Glass prototype project that pushed the limits of wearable (OK, “geeky”) technology, and Apple, with its “taptic” version of a haptic feedback engine that notifies users with a slight tap on the wrist. What will be interesting in this space is just how we begin to adapt to and take advantage of new sensory inputs that move us beyond the audio and visual cues we have previously relied on.

At the same conference, Margaret Kohin, Senior VP of eMagin, said her company was looking to use its emissive OLED-XL (OLED-on-silicon) microdisplay technology, with resolutions as high as 1920 × 1200 pixels, to bridge the gap between the consumer space and military HUD applications. She said eMagin’s consumer applications initiative has been in place since late 2014. The latest advance in this area is a 4-Mpixel OLED microdisplay that offers a luminance of 6500 cd/m², a 90° FOV (field of view), greater than 75% color gamut, and 85% uniformity. Sizes range from 0.86 in. (WUXGA) to 0.61 in. (SVGA). There is even a 15-µm VGA version that weighs less than 2 grams.
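The quoted diagonals and resolutions are enough to estimate pixel pitch. The Python sketch below does that arithmetic under two assumptions the article does not state: pixels are square, and the quoted size is the active-area diagonal. The function names are illustrative.

```python
import math

MICRONS_PER_INCH = 25_400.0  # 1 in. = 25.4 mm


def pixel_pitch_um(diagonal_in: float, h_px: int, v_px: int) -> float:
    """Pixel pitch in micrometres, assuming square pixels and an active-area diagonal."""
    diagonal_px = math.hypot(h_px, v_px)
    return diagonal_in * MICRONS_PER_INCH / diagonal_px


def pixels_per_inch(diagonal_in: float, h_px: int, v_px: int) -> float:
    """Pixel density along the diagonal, in pixels per inch."""
    return math.hypot(h_px, v_px) / diagonal_in


for name, diag, h, v in [("WUXGA", 0.86, 1920, 1200), ("SVGA", 0.61, 800, 600)]:
    print(f"{diag} in. {name}: {pixel_pitch_um(diag, h, v):.1f} um pitch, "
          f"{pixels_per_inch(diag, h, v):.0f} ppi")
```

For the 0.86-in. WUXGA panel this works out to roughly 9.6 µm per pixel, or a little over 2600 pixels per inch.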

Kohin also made the point that the mid-term wish list for AR and VR markets is a very good fit for OLED microdisplays. She said the list includes benchmarks such as high luminance (in the 20,000–30,000 cd/m² range), high contrast (true black), low power consumption (OLEDs require no backlight), and a small form factor.

If the Google Glass pull-back has discouraged some companies in the consumer NTE industry, eMagin is not among them. At Display Week, Kohin was clearly bullish on the space and said she believes that along with continued B2B and government clients, the consumer space is ready to move.

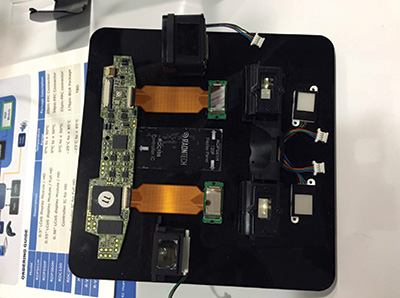

Meanwhile, in the exhibit hall at this year’s Display Week, Greg Truman, CEO of ForthDD (now part of Kopin), talked to us about the company’s high-resolution liquid-crystal-on-silicon (LCoS) microdisplay business (Fig. 2) and the applications opening up in the non-display space. One example of the latter is spatial light modulation, in which its QXGA-resolution LCoS chips are used in automated optical inspection equipment. “The big win comes in improved accuracy on the production line,” Truman told us, “in what is now a cubic-micron accuracy business.” While the NTE business still contributes up to 50% of Kopin’s revenue, the new field of automated optical inspection is helping diversify the business, lowering its dependence on the display market alone. The technology gives machine vision a highly precise ability to “see” flaws in solder joints and other assembly operations, well beyond the visual acuity of human inspectors.

Fig. 2: These ForthDD high-resolution microdisplays shown at Display Week are now used in machine-vision projects as well as high-end viewfinders.

Parent company Kopin has a long history of making HUDs for pilots, and the firm supplies display components including the complete optics package, driver software, a software development kit, and test and development platforms for its military and B2B customers, which include Thales, Elbit, and Rockwell Collins.

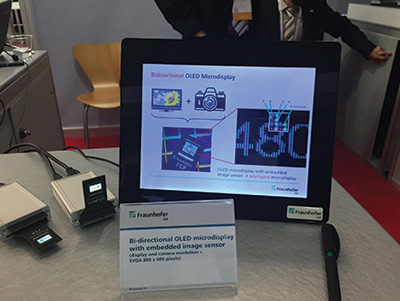

Elsewhere in the exhibit hall, another microdisplay designer, the Fraunhofer Institute for Organic Electronics, showed off its new full-color SVGA bi-directional OLED microdisplay (Fig. 3), which serves double duty as both a display and an eye-tracking sensor. An embedded image sensor tracks eye movements, using algorithms designed to locate the center of the pupil. This greatly enhances the system’s ability to discern user intent, offering some huge benefits in head-worn mobile personal electronic devices. The design combines a four-color (RGBW) pixel arrangement with an embedded photodiode image sensor that detects light. The display is built on a chip made in a 0.18-µm CMOS process and delivers SVGA resolution with a luminance of 250 cd/m². This idea of embedding sensors into the microdisplay to enhance the overall user experience is encouraging and will likely continue. Early applications include video or data goggles, augmented-reality eyewear, and even machine-to-machine applications. Once the resolution is enhanced beyond 720p, Fraunhofer anticipates suitability for biometric and security applications that can benefit from dedicated iris-detection algorithms, but there is more fundamental chip development work to be done before those become a reality.
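Fraunhofer did not detail its pupil-tracking algorithm. As a rough illustration only, one common starting point in camera-based eye trackers is the “dark pupil” approach: threshold the image and take the centroid of the darkest pixels. The function name `pupil_center` and the threshold value below are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def pupil_center(image: Sequence[Sequence[int]], threshold: int) -> Optional[Tuple[float, float]]:
    """Estimate the pupil center as the centroid of pixels darker than `threshold`.

    `image` is a 2-D grid of grey levels (0 = black). Returns (row, col), or None
    when no pixel is dark enough. Real trackers also reject eyelashes, shadows,
    and corneal glints, which this sketch ignores.
    """
    count = 0
    row_sum = 0
    col_sum = 0
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            if value < threshold:  # dark-pupil assumption: the pupil is the darkest blob
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        return None
    return row_sum / count, col_sum / count


eye = [
    [200, 200, 200, 200, 200],
    [200, 200,  20, 200, 200],
    [200,  20,  10,  20, 200],
    [200, 200,  20, 200, 200],
    [200, 200, 200, 200, 200],
]
print(pupil_center(eye, threshold=50))  # (2.0, 2.0)
```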

Fig. 3: Fraunhofer’s bi-directional OLED microdisplay appears at the bottom left of this image.

Participating in Display Week’s Innovation Zone (I-Zone) this year was Korea-based Raontech, demonstrating a new 720p HD LCoS microdisplay module (0.5 in., 1280 × 720 pixels) in a super-compact 8 mm × 8 mm optical package using an LED light source (Fig. 4). Proposed applications include automotive HUDs, wearable smart glasses, and pico-projectors moving to HD. Dual-display (two-eye) support for display goggles was also on Raontech’s product list. We were told a full-HD version is on the design roadmap.

Fig. 4: This Raontech working prototype board shows wearable LCoS microdisplay modules.

In a technical session devoted to microdisplays, Dr. Brian Tull of Lumiode, a Columbia University start-up, presented a paper titled “High Brightness Emissive Microdisplay by Integration of III-V LEDs with Thin Film Silicon Transistors.” He also showed the company’s next-generation microdisplay, a photolithographically pixelated LED array (think LED-based digital signs shrunk down to microdisplay size). The Lumiode team drives III-V compound semiconductor LEDs with thin-film transistors (TFTs) to create an emissive microdisplay in which the LED array acts as both the light source and the image-forming element. Building a high-brightness emissive LED microdisplay with TFTs, using methods proven in both LCD glass-substrate and OLED display manufacturing, represents a significant technological achievement. According to the company, “Our transistor process flow follows a conventional thin-film process with several modifications to ensure process compatibility with LED epitaxial wafers.” The result is a monolithically integrated thin-film device, made in a combined process flow, that uses standard GaN-based LEDs.

Tull claims several advantages for this modified thin-film process over traditional liquid-crystal or micromirror devices, and even over “low light” emitting OLED-based microdisplays. He believes that LEDs are the best choice for miniaturized wearable applications because they offer significant advantages in the most important display metrics: a luminance of 20 × 10⁶ cd/m², the highest efficacy (100 lm/W), and the most robust lifetimes (50,000 hours and up). This new approach, which integrates III-V compound semiconductor LEDs with silicon thin-film transistors, has the potential to shift the direction of small-display technology, perhaps for generations to come.
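To see how the quoted luminance and efficacy figures relate, the sketch below estimates the electrical power a flat emitter needs for a given luminance. It assumes a Lambertian emitter (luminous flux = π × luminance × area), a hypothetical 1-cm² emitting area, and that the 100-lm/W efficacy holds at every drive level, which real LEDs do not guarantee; optics and driver losses are ignored.

```python
import math


def lambertian_flux_lm(luminance_cd_m2: float, area_m2: float) -> float:
    """Luminous flux (lm) from a flat Lambertian emitter of uniform luminance."""
    return math.pi * luminance_cd_m2 * area_m2


def electrical_power_w(luminance_cd_m2: float, area_m2: float, efficacy_lm_w: float) -> float:
    """Electrical input power, assuming the efficacy is constant at this drive level."""
    return lambertian_flux_lm(luminance_cd_m2, area_m2) / efficacy_lm_w


AREA_M2 = 1e-4  # hypothetical 10 mm x 10 mm emitting area
for luminance in (10_000.0, 20e6):
    watts = electrical_power_w(luminance, AREA_M2, efficacy_lm_w=100.0)
    print(f"{luminance:>14,.0f} cd/m2 -> {watts:.3f} W")
```

Under these assumptions, 10,000 cd/m² over 1 cm² needs about 31 mW of input power, while holding the whole area at the quoted 20 × 10⁶ cd/m² would take roughly 63 W.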

3D Moves to Eye Tracking at SID

Using eye tracking, along with software, core display hardware, and a complex set of optics, a company called SuperD that is based in Shenzhen, China, has developed a second-screen mobile-display monitor it calls 3D Box. The “Box” shows 2D content from smartphones or tablets in autostereoscopic 3D via a wireless connection with the help of its eye-tracking software.

The 2D content and the user’s touch control come from a wirelessly connected mobile device, so that, say, a mobile game can be played on the SuperD display in autostereoscopic 3D. Previous versions of this technology surfaced as far back as 2011, using a laptop screen for input, but recent eye-tracking improvements have made the 3D effect much more compelling. This is because when the eye position is known, the image can be rendered in 3D in real time by sending the 3D pixel data through a lens located on the device’s LCD panel, which directs this autostereo content to the user’s eyes.
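SuperD did not describe its rendering pipeline in detail. The sketch below shows the general idea behind eye-tracked two-view autostereo under simplifying assumptions: a lens sheet covering `lens_pitch_px` pixel columns, left and right views interleaved in halves of each lens period, and an integer `eye_shift_px` that some calibration (not shown, and hypothetical here) derives from the tracked eye position.

```python
from typing import List


def assign_views(width_px: int, lens_pitch_px: int, eye_shift_px: int) -> List[str]:
    """Label each pixel column 'L' or 'R' for a two-view lens sheet.

    Columns in the first half of each lens period carry the left view and the second
    half the right view; eye_shift_px slides that pattern sideways.
    """
    labels = []
    for col in range(width_px):
        phase = ((col + eye_shift_px) % lens_pitch_px) / lens_pitch_px  # 0 <= phase < 1
        labels.append("L" if phase < 0.5 else "R")
    return labels


print("".join(assign_views(8, 4, 0)))  # LLRRLLRR
print("".join(assign_views(8, 4, 2)))  # RRLLRRLL
```

Shifting by half a lens period swaps which columns reach which eye; in a tracked system the shift would change as the viewer moves, keeping each eye inside its own viewing zone.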

SuperD also sponsored three papers at the Display Week symposium, including work on a polarizer-free LC lens and a study of the relationship between driving voltage and cell gap in a two-voltage driving structure. In the poster session, SuperD also showed contrast enhancement using an electrically tunable LC lens whose focal length is varied electrically. Because focusing with this lens does not change image magnification, focused and defocused images stay registered with each other, so contrast can be enhanced merely by switching between focus and defocus and combining the images through simple arithmetic operations.
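The poster’s exact arithmetic was not described. One plausible reading, sketched below as an assumption, is an unsharp-mask-style operation: add back the difference between the focused and defocused frames to boost detail. Constant magnification is what makes this possible, because pixel i of one frame lines up with pixel i of the other. The function and its `gain` parameter are hypothetical.

```python
from typing import List, Sequence


def enhance_contrast(focused: Sequence[float], defocused: Sequence[float],
                     gain: float = 1.0) -> List[int]:
    """Add back gain x (focused - defocused) and clip to the 8-bit range.

    Both frames must be captured at the same magnification so that index i refers
    to the same scene point in each.
    """
    if len(focused) != len(defocused):
        raise ValueError("frames must have the same number of pixels")
    out = []
    for f, d in zip(focused, defocused):
        value = f + gain * (f - d)  # detail = focused minus blurred
        out.append(min(255, max(0, round(value))))
    return out


edge_in_focus = [50, 50, 200, 200]
edge_defocused = [50, 100, 150, 200]
print(enhance_contrast(edge_in_focus, edge_defocused))  # [50, 0, 250, 200]
```

With a gain of 1.0, the step between the dark and bright sides of the edge grows from 150 to 250 grey levels.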

Another 3D technology found in the I-Zone came from Polarscreens, Inc., of Quebec, Canada. This company also makes use of advanced eye tracking, using a camera-based eye-tracking system to provide full-resolution stereo vision without 3D glasses, goggles, or other worn apparatus. It tracks both the head and eyes, using motion-prediction algorithms to create what it calls “eye gaze data.” Eye rotation speed and the point of eye focus are calculated from this gaze data and then used to create the 3D effect.

Here, a stereoscopic video is constructed using three fields (one common field and two alternating parallax-barrier fields). Because the fields are displayed in sequence, the eye rotates between fields, so each field does not register at the same location on the retina. Eye tracking and head tracking are used to determine the eye’s rotation speed in both the x and y directions, and that data is used to shift the video content of each parallax-barrier field to match the rotation speed, reconstructing a perfect image on the user’s retina.
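The per-field correction reduces to simple arithmetic. The sketch below assumes the eye rotates at a constant angular speed over one frame, a hypothetical rate of three fields per 60-Hz frame (180 fields/s), and a hypothetical 40 pixels per degree of visual angle; Polarscreens did not publish these parameters. Each later field is shifted by the angle the eye has turned since the first field, converted to pixels.

```python
def field_shift_px(eye_speed_deg_s: float, field_index: int,
                   field_rate_hz: float, pixels_per_degree: float) -> float:
    """Pixel shift for the field shown `field_index` fields after the first one.

    Assumes constant eye rotation speed; the content is moved by the same angle the
    eye has rotated, so the image lands on the same retinal location as field 0.
    """
    elapsed_s = field_index / field_rate_hz
    return eye_speed_deg_s * elapsed_s * pixels_per_degree


FIELD_RATE_HZ = 180.0      # hypothetical: 3 fields per 60-Hz frame
PIXELS_PER_DEGREE = 40.0   # hypothetical viewing geometry
speed_x, speed_y = 30.0, -10.0  # tracked eye rotation speed, deg/s

for i, name in enumerate(["common", "barrier A", "barrier B"]):
    dx = field_shift_px(speed_x, i, FIELD_RATE_HZ, PIXELS_PER_DEGREE)
    dy = field_shift_px(speed_y, i, FIELD_RATE_HZ, PIXELS_PER_DEGREE)
    print(f"{name:>9}: shift x {dx:+.2f} px, y {dy:+.2f} px")
```

At 30°/s the eye turns about 0.17° between consecutive fields, so under these assumptions the second field must move about 6.7 pixels to stay registered with the first.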

In essence, a computer-generated image is rendered based on the user’s viewpoint using two virtual cameras and the motion-prediction information, re-aligning each parallax field’s video content with the common field at the user’s retina. It works for still images, 3D objects, and video.
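A minimal sketch of the two-virtual-camera step, assuming a constant-velocity motion predictor, a fixed rendering latency, a typical 63-mm interpupillary distance, and eyes level along the screen’s x axis (no head roll or yaw). None of these values come from Polarscreens; the names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def predict_head(position: Vec3, velocity: Vec3, latency_s: float) -> Vec3:
    """Constant-velocity guess of where the head will be when the frame is displayed."""
    return Vec3(position.x + velocity.x * latency_s,
                position.y + velocity.y * latency_s,
                position.z + velocity.z * latency_s)


def virtual_cameras(head: Vec3, ipd_mm: float = 63.0) -> Tuple[Vec3, Vec3]:
    """Left and right camera positions, with the eyes assumed level along the x axis."""
    half = ipd_mm / 2.0
    return Vec3(head.x - half, head.y, head.z), Vec3(head.x + half, head.y, head.z)


# Units: millimetres and seconds; head 600 mm from the screen, moving right at 100 mm/s.
head = predict_head(Vec3(0.0, 0.0, 600.0), Vec3(100.0, 0.0, 0.0), latency_s=0.02)
left_cam, right_cam = virtual_cameras(head)
print(left_cam, right_cam)  # Vec3(x=-29.5, y=0.0, z=600.0) Vec3(x=33.5, y=0.0, z=600.0)
```

Rendering from the predicted rather than the last measured position is meant to hide the delay between tracking and display.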

One other benefit of this approach is that, because the system knows which object the user is focusing on, the data can also be used to sharpen that object while blurring the background, creating a depth-of-field illusion that is quite compelling. The working viewing distance ranges from just 6 in. to a whopping 7 ft., the group said. Eye tracking is disengaged if the head tilts out of range or if 2D content is selected for display. This technology was originally developed to counteract the effect of eye rotation during virtual-reality sessions in glasses-free autostereoscopic systems.
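Once the fixation depth is known, a renderer needs a rule for how much to blur everything else. The sketch below uses one common approximation, scaling blur with the dioptric difference |1/d − 1/d_focus| between each pixel’s depth and the fixation depth; the scale factor and cap are hypothetical tuning values, and Polarscreens’ actual rule was not described.

```python
def blur_radius_px(depth_m: float, focus_depth_m: float,
                   px_per_diopter: float = 4.0, max_radius_px: float = 8.0) -> float:
    """Blur radius for content at depth_m while the viewer fixates at focus_depth_m.

    Defocus is approximated by the dioptric difference |1/d - 1/d_focus|; the scale
    and the cap are hypothetical tuning values.
    """
    defocus_diopters = abs(1.0 / depth_m - 1.0 / focus_depth_m)
    return min(max_radius_px, px_per_diopter * defocus_diopters)


fixation_m = 0.5  # depth of the object the tracked eyes are fixated on
for depth in (0.25, 0.5, 1.0, 3.0):
    print(f"depth {depth:.2f} m -> blur radius {blur_radius_px(depth, fixation_m):.2f} px")
```

Working in diopters rather than meters means an object a few centimeters off the fixation point blurs noticeably up close, while far-background objects converge toward a common blur.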

A Light-Field-Display Approach to 3D

Zebra Imaging was in the I-Zone with a holographic light-field 3D display, a self-contained, real-time spatial 3D generator incorporating a table-top display that it calls the ZScape. On display were applications in simulation awareness and “visitation,” so the group was clearly targeting the military, but the system was also able to create dynamic and interactive images from diverse data sources, including LIDAR, CAD, biometric, and bathymetric (ocean-floor topography) data, all in real time. This is a full-color, auto-viewable (no special eyewear) table-top display offering compatibility with most common software platforms as well as interactivity with off-the-shelf peripherals (gesture, 3D-tracked wands and gloves, multi-touch, and gaming devices including pointers).

The images are made from an array of “hogels,” or holographic elements, created in the light-field display. According to Zebra Imaging’s I-Zone application: “The display plane is modeled as a 2D array of microlenses that correspond to camera positions on the display surface, defining a mathematical model of the physical emission surface of the display in model space. Hogels are computed at the center of every microlens from the perspective of the holographic-display plane. 3D operations such as pan, scale, zoom, tilt, and rotate are accomplished by transforming the modeled display plane through the scene’s model space. Thus, the modeled display plane becomes a window into the 3D scene which translates into the projected 3D light-field visualization.” My personal impression is that the technology is yet one more milestone in moving toward holographic displays, with some useful (even critical) military or security applications. For example, it can provide vital, real-time data to planners and decision makers, and perhaps even support medical applications. That said, we are still some distance from consumer-level displays of this type.
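The quoted description maps naturally onto a few lines of geometry. The sketch below builds the grid of microlens (hogel) centers that serve as virtual camera positions, then moves that modeled display plane through model space with scale, rotation about the plane’s normal (z) axis, and pan; tilt and the per-hogel rendering itself are omitted. Function names and grid sizes are illustrative assumptions, not Zebra Imaging’s code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def hogel_centers(nx: int, ny: int, pitch: float) -> List[Point]:
    """Centers of an nx-by-ny microlens grid in the z = 0 plane, centered on the origin."""
    x0 = -(nx - 1) * pitch / 2
    y0 = -(ny - 1) * pitch / 2
    return [(x0 + i * pitch, y0 + j * pitch, 0.0) for j in range(ny) for i in range(nx)]


def transform_plane(points: List[Point], scale: float = 1.0, rotate_deg: float = 0.0,
                    pan: Point = (0.0, 0.0, 0.0)) -> List[Point]:
    """Scale, rotate about the z axis, then pan: moves the display 'window' through model space."""
    c = math.cos(math.radians(rotate_deg))
    s = math.sin(math.radians(rotate_deg))
    moved = []
    for x, y, z in points:
        x, y, z = x * scale, y * scale, z * scale
        x, y = c * x - s * y, s * x + c * y
        moved.append((x + pan[0], y + pan[1], z + pan[2]))
    return moved


# Each transformed center would be a virtual camera from which one hogel view is rendered.
cameras = transform_plane(hogel_centers(4, 3, pitch=1.0), scale=2.0, rotate_deg=30.0,
                          pan=(10.0, 0.0, 5.0))
print(len(cameras), cameras[0])
```

Because the scene stays fixed and only the window moves, every navigation operation is just a different transform applied to the same grid before rendering.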

University of Seoul 3D Table-Top

Another table-top 3D display in the I-Zone was from the University of Seoul’s Human Media Research Center in Kwangwoon, Korea. This immersive table-top 3D display system is called “HoloDigilog.” It is a modified conventional direct-view system that cleverly uses sub-viewing zones, a lenslet array, and light-field technology, with a UHD (3840 × 2160-pixel) flat panel as the base (the floor of the hologram, if you will). It allows multiple viewers to see a 3D image projected onto the 23.8-in.-diagonal table-top panel. It looks surprisingly good for a table-top 3D display. The group said it had (but did not bring) 20- and 30-in. versions of the display in Korea as well.

HoloDigilog consists of a four-part system: (1) real-time pick-up of a 3D scene by capturing its intensity and depth images, (2) depth compensation to coordinate between the pick-up and the table-top display, (3) creation of an elemental image array (EIA) through image processing using the compensated intensity and depth maps, and (4) display of the EIA on the immersive table-top 3D display system. The existing system is limited to 300 × 200 × 256 “boxels,” and an even higher-resolution panel would be required to enlarge the projected object. There is also an optical projection layer (patent pending, so few details were given).
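Step (3), generating the elemental image array from intensity and depth, can be illustrated with the textbook pinhole model of integral imaging: each scene point is projected through the center of each lenslet onto the display plane behind it, and a depth buffer keeps the nearest point where several land on the same pixel. The 1-D sketch below is a simplification under those assumptions (it ignores depth compensation, pseudoscopic correction, and the group’s patent-pending optics), and all parameters are hypothetical.

```python
from typing import List, Sequence, Tuple


def elemental_image_array_1d(points: Sequence[Tuple[float, float, float]], num_lenses: int,
                             lens_pitch: float, gap: float, pixel_pitch: float) -> List[float]:
    """Render a 1-D elemental image array (EIA) from (x, z, intensity) scene points.

    Pinhole model: each lenslet is a pinhole at its center, with the display plane a
    distance `gap` behind it; z > 0 is the distance in front of the lens array.
    All lengths share one unit. Pseudoscopic correction is ignored.
    """
    px_per_lens = round(lens_pitch / pixel_pitch)
    eia = [0.0] * (num_lenses * px_per_lens)
    nearest = [float("inf")] * len(eia)  # depth buffer for occlusion
    for k in range(num_lenses):
        center = (k - (num_lenses - 1) / 2) * lens_pitch
        left_edge = center - lens_pitch / 2
        for x, z, intensity in points:
            u = center - gap * (x - center) / z  # ray through the pinhole hits the display here
            offset = u - left_edge
            if not 0 <= offset < lens_pitch:
                continue  # falls outside this lenslet's elemental image
            idx = k * px_per_lens + min(int(offset // pixel_pitch), px_per_lens - 1)
            if z < nearest[idx]:  # nearer points occlude farther ones
                nearest[idx] = z
                eia[idx] = intensity
    return eia


scene = [(0.0, 10.0, 1.0), (0.0, 5.0, 0.5), (1.0, 10.0, 0.8)]
print(elemental_image_array_1d(scene, num_lenses=1, lens_pitch=1.0, gap=1.0, pixel_pitch=0.25))
```

The two points at x = 0 land on the same pixel and the nearer one (z = 5) wins; the off-axis point lands on a neighboring pixel, mirrored as a pinhole would mirror it.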

On the application side, the group mentioned a table-top display for sporting events, among other uses, but creating content for this display, and then distributing it widely, may still be a long way off. Even a simple application like the one shown in Star Wars, a “Princess Leia” version of FaceTime with 360° viewing for the entire family to see, would be an awesome “killer app.” But don’t mind me; I’m just dreaming here. The Korea-based group said it is possible to stream content in real time, but that doing so is highly processing-intensive. In short, this technology is still in its very early days, but it is exciting to see the progress.

HUD Wavelength-Selective Excitation

Sun Innovations was in the exhibit hall at Display Week with a full-windshield HUD system that renders objects in color using a novel emissive projection display (EPD). Remarkably, a UV light projector projects images in multiple wavebands between 360 and 460 nm onto a windshield coated with Sun’s fully transparent emissive films. The process, called projective excitation, uses a laser- or LED-based HUD projector for what company namesake and founder Ted Sun calls wavelength-selective excitation (WSE).

On the materials side, specially treated color-sensitive films are made as optically clear sheets less than 50 µm thick and stacked in an RGB configuration (Fig. 5).

Fig. 5: Sun Innovations creates color-sensitive RGB films and excites them with a projector to create full-windshield HUDs.

This luminescent material is added to standard automotive glass that has embedded polyvinyl butyral (PVB)-based resin films (the interlayer used to make car windshields shatterproof). Sun stacks these color-sensitive RGB films and excites them using a display engine powered by a UV light source; each film has distinctive absorption and emission characteristics, so the red, green, and blue layers can be excited separately. Sun insists there is no extra coating step or other change to the primary manufacturing process used to create the film. Better yet, Sun said these films can be produced in a roll-to-roll process that is low cost and haze-free (there are no pixel structures to interfere with light transmission). If this sounds like the Holy Grail for the automotive display industry, we agree.

For automotive applications, Sun combines this material with a HUD projector based on a Blu-ray laser and x-y laser image scanners. The group is also working on a separately designed, palm-sized full-windscreen HUD projector with integrated control and interface boards to speed adoption and increase design flexibility. In principle, the projector encodes the original color image into three excitation wavebands, each of which excites the corresponding film layer to generate red, green, or blue. The trick is to do this without interfering with the excitation or emission of the other two layers. In full implementation, this system can display information on any window in the car. Other milestones on the horizon include embedded sensor integration, touch and gesture control, and voice commands for hands-free operation.
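The “trick” of exciting one layer without disturbing the other two can be framed as a small linear problem if one assumes each layer’s emission is proportional to the excitation it absorbs. In the sketch below, a hypothetical 3 × 3 crosstalk matrix describes how strongly each excitation band drives each layer, and the projector’s band powers are found by solving that system. Sun Innovations did not describe its encoding; the matrix values and function names are illustrative only.

```python
from typing import List, Sequence

Matrix = List[List[float]]

# Hypothetical crosstalk: row = emitting layer (R, G, B), column = excitation band 1..3.
# Diagonal terms are the intended excitation; off-diagonal terms are leakage.
CROSSTALK: Matrix = [
    [1.00, 0.05, 0.02],
    [0.03, 1.00, 0.04],
    [0.01, 0.06, 1.00],
]


def det3(a: Matrix) -> float:
    """Determinant of a 3x3 matrix."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def solve3(m: Matrix, b: Sequence[float]) -> List[float]:
    """Solve m @ x = b by Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("crosstalk matrix is singular")
    x = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = b[r]  # replace one column with the target vector
        x.append(det3(mc) / d)
    return x


def excitation_for_rgb(rgb: Sequence[float], crosstalk: Matrix = CROSSTALK) -> List[float]:
    """Band powers that make the film stack emit the requested RGB; negatives clipped to 0."""
    return [max(0.0, p) for p in solve3(crosstalk, list(rgb))]


print([round(p, 4) for p in excitation_for_rgb((0.5, 0.5, 0.5))])
print([round(p, 4) for p in excitation_for_rgb((1.0, 0.0, 0.0))])
```

For a pure red target, the exact solution calls for slightly negative power in the other two bands to cancel leakage; since negative power is impossible, the clip leaves a little residual green and blue, which is one way to see why layers with little absorption overlap matter.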

Display Evolution Empowers a New Wave of Devices

So there you have it: a primer on microdisplays, NTEs, and 3D displays at this summer’s Display Week. And while the new film-based displays and table-top holograms we saw are still a bit far out, it is exciting to see on the Display Week show floor what may be the beginnings of our future car, window, living-room, and mobile experiences. Some milestone NTE technologies have finally reached size, weight, and battery-life thresholds that will empower the next wave of wearable AR and VR devices. These will push us toward an ever-tighter integration of virtual and augmented reality with real life. In addition, displays are becoming ubiquitous, as sensors and microprocessors did before them. Displays that provide easy access to information are becoming the norm in virtually all parts of our lives. Look for displays to melt into the background, only to emerge when needed, and perhaps when anticipated by the smart objects all around us. Until that time, the industry continues to work, integrate, and iterate toward fulfilling our dreams of ever more efficient and seamless devices.

Steve Sechrist is a display-industry analyst and contributing editor to Information Display magazine. He can be reached at sechrist@ucla.edu or by cell at 503/704-2578.