True Color: The Road to Better Front-of-Screen Performance

The authors have created an architecture that optimizes image processing across a complex display ecosystem, including smartphones, tablets, and desktop panels.

by Stefan Peana and Jim Sullivan

In a race to win customers, manufacturers are bringing high-resolution displays to market with the message that more pixels per inch equal premium image quality. While focusing on a single performance measure such as resolution may come across as a sensible way to market display quality, it is not a sufficient means of achieving superior front-of-screen performance. Achieving that performance is a complex process involving numerous elements, including environmental conditions, system requirements, user preferences, and the mapping of input to output images, as well as the optimization of multiple display-performance parameters – resolution, color, brightness, contrast – all working together in real time.

This article presents an architecture that optimizes image processing across a complex display ecosystem such as a smartphone, tablet, or desktop. The architecture leverages a mathematical model that enables color expansion to produce a brighter and more vibrant image output with superior front-of-screen performance.

Improved Display Performance Is a Game Changer

The rapid market adoption of high-resolution displays indicates consumers’ desire for better front-of-screen performance. Manufacturers are working to define new display categories and features – streaming media content optimization, wider-color-gamut capability for gaming and rendered content, greater dynamic range to support greater flexibility of artistic intent – all in order to win new customers. This recent trend represents a shift from the previous generation of computing products that were designed and optimized for total system performance (i.e., size, weight, cost, battery life, etc.), in which displays were deemed sufficient if usable for applications such as Word, Excel, and PowerPoint. Display functionality kept pace with product needs and stayed in line with display technology development.

The importance of improved display performance in the latest devices tracks the rising use of smartphones and social media for mass communication. A brilliant and vivid display is the face of a computing product. As previously mentioned, smartphone and computer makers promote improved display resolution or brightness as competitive differentiators.1 In the absence of a display front-of-screen performance standard, such promotions have proven successful.

Strides in Color Design

Several industry leaders have developed design elements to enhance front-of-screen performance. Dolby, a forerunner in the theater viewing market, has made a compelling argument to pair high-definition resolution with wider color gamut as an improved approach to image quality.2 Dolby improves display images by delivering the wider color gamut together with high-dynamic-range (HDR) input content, metadata that encodes the content characteristics, and multi-zone backlight control. Dolby’s work is most suitable for television and difficult to realize in mobile devices because of product form factors such as size and configuration as well as battery-life limitations.

Technicolor has created a color-certification process in partnership with Portrait Displays to guarantee color quality on any computer or mobile device.3 The primary focus of color certification is to eliminate variation in color performance across a multitude of display products. For example, a consumer may not see a correct representation of product color on a shopping website and decide to return the merchandise at a reseller’s expense. Instances such as these are a major challenge in the context of today’s portable products. A user who expects to acquire, share, and view images across multiple devices is often frustrated by the difficulty of maintaining visual consistency in a complex ecosystem.

J. F. Schumacher et al.4 developed a perceptual quality metric as a predictive measure of image quality rather than a measure of image fidelity rendered by the display. (See “PQM: A Quantitative Tool for Evaluating Decisions in Display Design” in the May/June 2013 issue of Information Display.) A change in display specifications alters the quality of the image; the measure provides a single metric to detect this change. Display makers can use this metric to make design choices across multiple specifications, improving user perception of the display. Nonetheless, a technical focus on this metric alone will not lead to the best visual experience.

Manufacturers of timing controllers offer a built-in color-management engine that uses simple one-dimensional color corrections to modify display color. These color-management solutions tend to be specific to a particular display and its timing controller. As a result, manufacturers such as Dell who source multiple display vendors for the same platform are unable to deliver consistent visual performance across all manufacturing builds.

Other companies have developed hardware solutions located between the GPU output and display input, converting images using proprietary algorithms.5,6 The centerpiece of these solutions is a video-display-processor IC, which handles various input and output protocols, manages and buffers images, and performs high-speed processing for most display resolutions. While offering compelling display-performance capability, these intermediate hardware solutions duplicate existing GPU and timing-controller capability, impacting product size and cost.

An Innovative Architecture for Optimal Display Front-of-Screen Performance

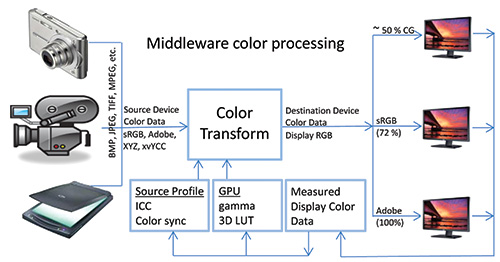

Optimizing display front-of-screen performance requires a new architecture that consists of multiple elements working together. The most complex element of this architecture is the color-processing algorithm, which manages multiple color transformations while factoring in various input variables and user preferences under diverging viewing environments. The algorithm must be flexible, expandable, and capable of processing large data with the ability to maximize the visual experience of any display. It must also be able to distinguish between skin tones and non-skin-tone objects for independent processing. Moreover, the algorithm must manage the input-to-output mapping, identifying the need to compress or expand the input image relative to the output display device capability as illustrated by Fig. 1.

Fig. 1: The above schematic outlines a color-mapping workflow for multi-sourced input and destination output.

During image compression or expansion from one color space to another, preserving the original location of colors is challenging and requires transform functions that map the image from the input-device color space to the output-device color space. Running each image through the transform function requires hardware overhead, which can be power hungry and slow.

The sRGB color space, used almost exclusively nowadays to represent color, may prove ineffective during expansion as it cannot preserve color accuracy, particularly in reds and blues. In the sRGB color space, if blue is expanded to be more colorful, it can become purple. Additionally, expanding color using sRGB adversely affects other elements such as skin tones, gray tones, white points, environmental illumination, and color temperature, as they increase at different magnitudes.

In 2011, Rodney Heckaman7 developed an algorithm to achieve brighter and more vibrant colors during color expansion, realizing a richer visual experience. The algorithm involves a sigmoidal function that isolates colors and the IPT color space to identify planes of constant hue for a particular display at a given luminance. Yang Xue describes the IPT color space in his graduate thesis, “Uniform Color Spaces Based on CIECAM02 and IPT Color Differences,” paragraph 2.1.8 Identifying and rendering specific colors independently (green grass, blue sky, purple flowers) maximizes display-gamut potential while maintaining memory colors (skin tone, etc.) to their original intent. The algorithm detects the input color values of each object type and applies the selected color preferences to produce the chosen output colors for just those objects. The remaining colors are processed independently, with color boosted to compensate for color losses in poor lighting. This results in larger display-gamut utilization and an expanded output image in which memory colors are identified and maintained to their original intent while secondary colors are rendered independently. Building on Heckaman’s work, Jim Sullivan of Entertainment Experience9 has developed a model to improve the overall visual quality of color displays using IPT color space to convert to RGB while preserving input color, hue, and brightness.
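As a rough illustration of how a sigmoidal function can isolate colors for expansion, the sketch below scales the P and T coordinates of an IPT color by a gain that follows a logistic curve of chroma. It is not Heckaman’s published function: the names `sigmoid_weight` and `expand_pt`, and the `midpoint`, `slope`, and `max_gain` values, are hypothetical choices made for this example.

```python
import math

def sigmoid_weight(chroma, midpoint=0.15, slope=30.0):
    """Logistic weight in (0, 1): near 0 for near-neutral colors, near 1 for saturated ones."""
    return 1.0 / (1.0 + math.exp(-slope * (chroma - midpoint)))

def expand_pt(p, t, max_gain=1.4, midpoint=0.15, slope=30.0):
    """Expand chroma in the P-T plane; parameter values are illustrative assumptions."""
    chroma = math.hypot(p, t)
    weight = sigmoid_weight(chroma, midpoint, slope)
    gain = 1.0 + (max_gain - 1.0) * weight
    # P and T share one gain, so the hue angle atan2(t, p) is unchanged.
    return p * gain, t * gain
```

Because both chroma axes are multiplied by the same factor, a color moves outward along its own hue line, while grays and other near-neutral colors stay almost where they were.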

Look-Up Tables

Leveraging Heckaman’s algorithm and Sullivan’s model, our research team at Dell has co-developed an improved approach to achieving superior front-of-screen performance without compromising processing limitations. This approach involves creating a library of 3D Look-Up Tables (LUTs), which cover RGB to IPT color-space transformations that can be easily accessed and implemented. The IPT color space includes both environmental illumination and color temperature.
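A 3D LUT stores output colors only at the nodes of a coarse RGB grid, and colors that fall between nodes are interpolated. The sketch below shows one common way to do this, trilinear interpolation, in plain Python. The grid size, the identity table, and the function names are assumptions for illustration; the article does not specify how the True Color tables are sampled.

```python
def make_identity_lut(n):
    """Build an n x n x n table (n >= 2) whose nodes map each RGB value to itself."""
    step = 1.0 / (n - 1)
    return [[[(r * step, g * step, b * step) for b in range(n)]
             for g in range(n)] for r in range(n)]

def lookup(lut, rgb):
    """Trilinear interpolation of an RGB triple (components in 0..1) through the table."""
    n = len(lut)
    idx, frac = [], []
    for c in rgb:
        x = min(max(c, 0.0), 1.0) * (n - 1)   # clamp, then scale to grid units
        i = min(int(x), n - 2)                # lower node of the enclosing cell
        idx.append(i)
        frac.append(x - i)
    (r0, g0, b0), (fr, fg, fb) = idx, frac
    out = [0.0, 0.0, 0.0]
    # Accumulate the eight corner nodes of the cell, weighted by proximity.
    for dr, wr in ((0, 1.0 - fr), (1, fr)):
        for dg, wg in ((0, 1.0 - fg), (1, fg)):
            for db, wb in ((0, 1.0 - fb), (1, fb)):
                node = lut[r0 + dr][g0 + dg][b0 + db]
                w = wr * wg * wb
                for k in range(3):
                    out[k] += w * node[k]
    return tuple(out)
```

In practice the node values would hold the precomputed result of the color transformation, so the per-pixel cost is a fixed number of multiplies and adds regardless of how complex the underlying model is.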

Using the environmental inputs, we set P and T to zero, which defines the white point based on the viewer; all colors then adjust relative to white. Expanding a data point proportionally on the T axis saturates the environmental brightness and sets the desired saturation level. If the data-point color needs to change, it shifts in the desired color direction, selected from the appropriate 3D LUT. For example, shifting skin tone warmer moves the data point toward yellow, in the positive-T direction of the IPT color space. For a lighter skin tone, the color moves in the negative-T direction, toward blue.
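To make the IPT operations above concrete, the following sketch converts an sRGB color to IPT and scales its P and T coordinates relative to a chosen white. It uses the standard sRGB decoding and the commonly published IPT matrices, which is an assumption here, since the article does not list the coefficients used in True Color. The function names are hypothetical, and the inverse conversion back to RGB is omitted for brevity.

```python
import math

# Linear sRGB -> CIE XYZ (D65).
RGB_TO_XYZ = ((0.4124, 0.3576, 0.1805),
              (0.2126, 0.7152, 0.0722),
              (0.0193, 0.1192, 0.9505))
# XYZ (D65) -> LMS, then nonlinear LMS -> IPT (commonly published IPT coefficients).
XYZ_TO_LMS = ((0.4002, 0.7075, -0.0807),
              (-0.2280, 1.1500, 0.0612),
              (0.0000, 0.0000, 0.9184))
LMS_TO_IPT = ((0.4000, 0.4000, 0.2000),
              (4.4550, -4.8510, 0.3960),
              (0.8056, 0.3572, -1.1628))

def _mat_vec(m, v):
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))

def _srgb_decode(c):
    """Undo the sRGB transfer curve for one component in 0..1."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def _signed_pow(x, e):
    """Power that keeps the sign, so slightly negative LMS values do not fail."""
    return math.copysign(abs(x) ** e, x)

def srgb_to_ipt(rgb):
    linear = tuple(_srgb_decode(c) for c in rgb)
    lms = _mat_vec(XYZ_TO_LMS, _mat_vec(RGB_TO_XYZ, linear))
    return _mat_vec(LMS_TO_IPT, tuple(_signed_pow(x, 0.43) for x in lms))

def scale_saturation(ipt, white_ipt, gain):
    """Move a color away from (gain > 1) or toward (gain < 1) the white point in P-T."""
    i, p, t = ipt
    _, pw, tw = white_ipt
    return (i, pw + gain * (p - pw), tw + gain * (t - tw))
```

With these matrices, sRGB white lands very close to I = 1, P = 0, T = 0, so scaling P and T about the white point changes saturation while leaving the I (lightness) coordinate untouched.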

Skin tone is color specific and easily identifiable; therefore, it can be differentially expanded.

The IPT color-space data points also expand proportionally to the amount of color loss in brighter light. Blues and greens do not lose as much color as reds and yellows; consequently, reds and yellows expand more when the environmental light is brighter. As a result, unequal expansion must be considered. Similarly, uneven compensation is applied for shadows and highlights where the color of the brighter area expands more than for shadows.
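The unequal expansion described above can be pictured as a chroma gain that depends on both hue and ambient level. The sketch below is purely illustrative: the cosine weighting, the `base_extra` and `warm_extra` amounts, and the normalized `ambient` input (0 for dark, 1 for very bright) are hypothetical and are not taken from the True Color implementation. The different treatment of shadows and highlights is not modeled.

```python
import math

def hue_dependent_gain(p, t, ambient, base_extra=0.2, warm_extra=0.5):
    """Chroma gain that grows with ambient light, more so for red-to-yellow hues."""
    hue = math.atan2(t, p)  # 0 points along +P (red), pi/2 along +T (yellow)
    # Weight peaks midway between the +P and +T axes and is zero for opposite hues.
    warm = max(0.0, math.cos(hue - math.pi / 4.0))
    return 1.0 + ambient * (base_extra + warm_extra * warm)

def expand_for_ambient(ipt, ambient):
    """Apply the hue-dependent gain to P and T, leaving I unchanged."""
    i, p, t = ipt
    gain = hue_dependent_gain(p, t, ambient)
    return (i, p * gain, t * gain)
```

With these example values, a warm color receives up to 1.7 times its original chroma in the brightest setting, a color on the opposite side of the P-T plane receives 1.2 times, and nothing changes when `ambient` is 0.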

Several notebook systems have recently been released with Dell’s True Color software, which optimizes display color output by factoring in environmental conditions to make images appear as if in real life. True Color software also performs one-to-one image input to output color mapping and expand-maps the input image to a wider display gamut, making the output image appear more colorful. The True Color software is ideal for products with smaller color gamuts, such as computers and mobile devices, especially when used under a variety of environmental viewing conditions, or by the new generation of wide-color-gamut displays where the accuracy of color remapping is critical. The product of the software is a front-of-screen image true to the artistic intent of the creator.

True Color Architecture

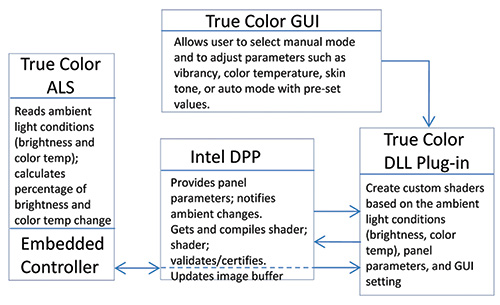

True Color architecture, depicted in Fig. 2, is composed of three functional elements (ALS, DPP, and DLL), as well as the True Color software and GUI. The True Color Ambient Light Sensor (ALS) responds to ambient brightness and color-temperature events. It filters the ambient-light events to control the rate of change and interacts with the True Color DLL through the Intel DPP to communicate ambient-light changes.

Fig. 2: The True Color high-level architecture incorporates three functional elements (ALS, DPP, and DLL), the True Color software, and a GUI.

The Intel Driver Post-Processing (DPP) is a component of the Intel Display Driver, which supports True Color functionality. The DPP accepts the pixel shaders and associated parameters from the True Color DLL and uses these parameters to transform the image to be displayed. The new image is placed on the display buffer and then pushed to the display. The True Color Dynamic Link Library (DLL) is a plug-in loaded by the Intel DPP that receives the environmental brightness and color-temperature values to create custom shaders, which provide ambient-light compensation using color boost and white-point adjustment. The GUI lets the user interact with the True Color application settings through simple graphical icons and visual sliders.

The process starts with the True Color ALS reading the environmental illumination and color temperature, then passing the data to the embedded controller for processing. When the input data falls outside the preset ranges, the embedded controller notifies the Intel DPP via an API. The new data triggers the selection of a new 3D LUT from the library to change the color saturation and white point based on the environmental conditions. The Intel DPP requests new custom shaders from the True Color DLL based on the environmental input and the interface setting. The shaders are generated by averaging values in a maximum of four tables from a set of 16 tables that covers four light levels and four color temperatures. Once the image to be displayed is adjusted, it is placed on the display buffer and then pushed to the display.
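One plausible reading of “averaging values in a maximum of four tables” is a weighted blend of the tables that surround the measured light level and color temperature in the 4 x 4 grid. The sketch below takes that reading. The grid values in `LUX_LEVELS` and `CCT_LEVELS`, the linear weighting, and the function names are assumptions, since the article gives only the category names (dark through extra bright, incandescent through D65).

```python
from bisect import bisect_right

# Hypothetical grid coordinates for the 16 tables (not from the article).
LUX_LEVELS = [10.0, 100.0, 1000.0, 10000.0]     # dark, dim, bright, extra bright
CCT_LEVELS = [2850.0, 4000.0, 5000.0, 6500.0]   # incandescent, fluorescent, D50, D65

def axis_weights(levels, value):
    """Return (index, weight) pairs for the one or two grid points nearest to value."""
    if value <= levels[0]:
        return [(0, 1.0)]
    if value >= levels[-1]:
        return [(len(levels) - 1, 1.0)]
    hi = bisect_right(levels, value)
    lo = hi - 1
    f = (value - levels[lo]) / (levels[hi] - levels[lo])
    return [(lo, 1.0)] if f == 0.0 else [(lo, 1.0 - f), (hi, f)]

def table_weights(lux, cct):
    """Weights for at most four (light, temperature) tables; the weights sum to 1."""
    return {(i, j): wi * wj
            for i, wi in axis_weights(LUX_LEVELS, lux)
            for j, wj in axis_weights(CCT_LEVELS, cct)}

def blend_tables(tables, lux, cct):
    """tables[i][j] is a flat list of table values; returns their weighted average."""
    out = [0.0] * len(tables[0][0])
    for (i, j), w in table_weights(lux, cct).items():
        for k, value in enumerate(tables[i][j]):
            out[k] += w * value
    return out
```

When a reading sits exactly on a grid point, a single table is used; when it falls between points on both axes, four tables contribute. Interpolating on a logarithmic lux scale might track perception more closely, but that is left out to keep the sketch short.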

True Color Software

The True Color software generates the 3D LUTs using display-measured data of the white and primary colors at various brightness levels and four different environmental illuminations (dark, dim, bright, and extra bright) and four color temperatures (incandescent, fluorescent, D50, and D65).

The software either automatically or manually sets the degree of expansion, also referred to as the boost level, which is always maximum-bounded by the display color-gamut capability. The maximum boost possible is defined as the outside edges of the display color volume calculated using the display color primaries and the white brightness, so the boost “knows” how far it can expand to stay in gamut in all directions in the color space.
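The idea of a boost that “knows” how far it can expand can be sketched as a clamp on the requested gain. For simplicity, the example below works directly in normalized display RGB and expands each color away from a gray of the same mean value, whereas the article describes the bound in terms of the display color volume in IPT. The choice of neutral and the function names are assumptions for illustration.

```python
def max_in_gamut_gain(rgb, neutral):
    """Largest gain g for which neutral + g * (c - neutral) stays in 0..1 for every channel."""
    limit = float("inf")
    for c in rgb:
        d = c - neutral
        if d > 0.0:
            limit = min(limit, (1.0 - neutral) / d)
        elif d < 0.0:
            limit = min(limit, (0.0 - neutral) / d)
    return limit

def bounded_boost(rgb, requested_gain):
    """Expand saturation by the requested gain, capped at the display gamut boundary."""
    neutral = sum(rgb) / 3.0  # simple mean gray; an assumption, not the article's white
    gain = min(requested_gain, max_in_gamut_gain(rgb, neutral))
    return tuple(neutral + gain * (c - neutral) for c in rgb)
```

For the color (0.6, 0.4, 0.2), a requested gain of 5 is reduced to 2, the point at which the blue channel reaches zero, giving approximately (0.8, 0.4, 0.0). A requested gain of 1.5 is applied unchanged.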

The sigmoidal function is replaced by a nested formula that contains a set of inter-dependent factors managing the percentage of color boost, the magnitude of skin enhancement, the display color gamut, the gamma level, and the white-point management. The True Color algorithm uses these factors, along with user presets, to interpret the input image and map out the new display image.

The True Color Interface

The True Color interface set-up features two primary modes:

Manual Mode: The user can adjust display settings by selecting the vibrancy level, color-temperature setting, and flesh-tone enhancement. The vibrancy slider adjusts the color boost from minimum, normally used for dark ambient lighting, to maximum for brighter lighting. Systems that offer manual mode may not include an ambient-light sensor; therefore, the user can select the color temperature by moving the slider from left to right – incandescent to fluorescent. Skin-tone settings can be varied from no enhancement to maximum flesh-tone enhancement by moving the slider from normal to warm. Manual mode overrides all presets, as the user chooses to optimize all settings.

Auto Mode: Factory pre-set; the auto mode features an integrated ambient-light sensor to enable auto selection of 3D LUTs that produce color saturation and color white-point changes to accommodate the user’s environmental lighting.

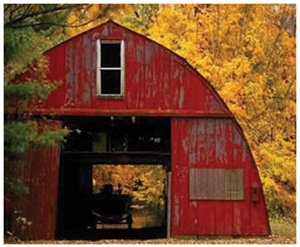

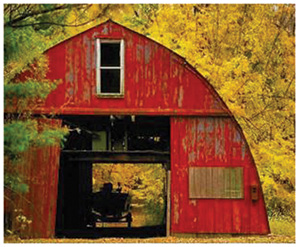

System Demonstrations

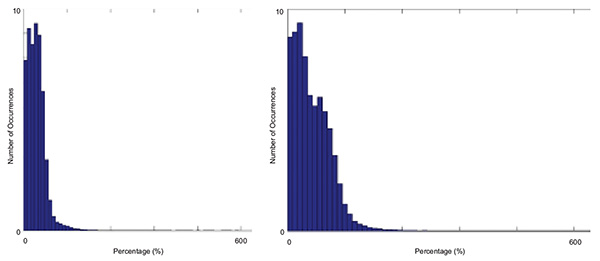

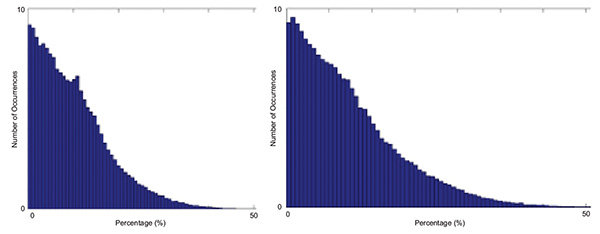

To validate our solution, we selected two images and tested them on two different systems using True Color. We created 3D LUTs for display based on the color primaries and white point. The first image [Fig. 3(a)] and the True Color application were loaded onto a Dell AIO Inspiron Series 5000 FHD desktop and enhanced using two boost settings – the medium and highest levels [Fig. 3(b)]. Using colorfulness, defined as the color volume in IPT color space, we created histograms of the enhanced images, illustrating the mean [Fig. 4(a)] and the 95% [Fig. 4(b)] increase of colorfulness vs. frequency of occurrence. Similar histograms were created for image gamut vs. occurrence [Fig. 5(a) and 5(b)]. The mean and 95% measurements of the image gamut and colorfulness are summarized in Fig. 6.

Fig. 3: (a) Original image. (b) Enhanced image using highest boost setting.

Fig. 4: (a) Mean and 95% increase in colorfulness for medium boost setting. (b) Mean and 95% increase in colorfulness for highest boost setting.

Fig. 5: (a) Mean and 95% image gamut increase for medium boost setting. (b) Mean and 95% image gamut increase for highest boost setting.

Fig. 6: Both the mean and 95% measurement data are shown for image gamut and colorfulness enhancement.

Measurement data show that 95% of the input-image data points are mapped to a new location, which expands by 7.40 and 17.97, respectively, for the highest boost setting. By using a similar setting, the color increases to 42.71 and 101.04, respectively. The True Color highest boost setting produces the best visually noticeable image enhancement. The new image features higher color saturation and a brighter background, as observed in the surrounding foliage. The barn in the enhanced image stands out, looking more vivid, as if it were being viewed in a brighter sunlight.
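The summary statistics reported above can be reproduced in outline with a few lines of code. The sketch below computes the mean and a 95th-percentile value of the per-pixel increase, using chroma in the P-T plane as a simple stand-in for colorfulness. That stand-in, the reading of “95%” as a 95th percentile, and the function names are assumptions; the article defines colorfulness as color volume in IPT space and does not give its exact computation.

```python
import math

def mean(values):
    return sum(values) / len(values)

def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100.0))
    return ordered[rank - 1]

def chroma_increase(original_pt, enhanced_pt):
    """Per-pixel change in chroma between matching (P, T) pairs."""
    return [math.hypot(*e) - math.hypot(*o)
            for o, e in zip(original_pt, enhanced_pt)]
```

Given lists of (P, T) pairs for the original and enhanced images, `mean(chroma_increase(a, b))` and `percentile(chroma_increase(a, b), 95)` yield the two summary figures for one boost setting.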

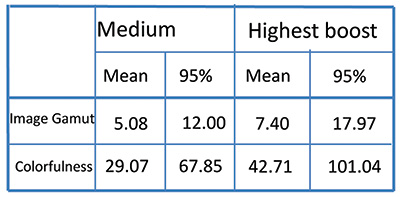

Similarly, we loaded the second system, a Dell Inspiron 15 7000, with the 3D LUTs and the True Color application. The image was tested using the maximum skin-tone adjustment and warm-illumination setting; these images were measured using Minolta Model C210 U-Probe color-processing equipment with a large measurement spot size. Four measurement points were taken as indicated in Fig. 7(a) and then plotted to show the change in magnitude and directional shift (Fig. 8).

As observed in Fig. 8, the four points move away from the white point toward higher saturation. The non-linear data transformation of the conversion equation affects the magnitude and directional change of each point differently. The skin boost is set to warm while the background is brightened and adjusted for the user’s color-temperature environment. As a result, the image in Fig. 7(b) shows a warmer skin tone over a brighter gray background, appearing vivid and more colorful.

Fig. 7: (a) Original image with measurement locations. (b) Enhanced image using maximum skin tone and warm illumination.

Fig. 8: Optical data of the enhanced image using maximum skin tone and warm illumination settings.

Better Display Quality through Color

True Color improves front-of-screen performance by targeting the colorfulness of output images. The main purpose of this work on color improvement was the design of a robust system-level architecture, flexible enough for the complex tasks of color management (i.e., transform, correct, boost) and controlled by a graphical user interface for individual customization. Developers can access the pixel data using the SDK specification and True Color enabled Intel chipsets to build innovative applications that lead to the next level of display performance (e.g., color-accuracy adjustments and new accessibility tools for color-blind users using look-up tables). Future development will focus on intermediate steps in which the input image is sharpened and contrast enhanced so that, along with color management, they enable a superior front-of-screen experience.

References

1. E. Kim, “Apple iPhone 6 Plus vs. Samsung Galaxy Note 4: Big-Screen Showdown,” pcmag.com (Sept. 19, 2014).

2. P. Putman, “Digital In The Desert: The 2014 HPA Tech Retreat,” HDTV Expert.com (Feb. 20, 2014).

3. M. Chiappetta, “Technicolor Color Certification Program: Marketing hype or genuine consumer benefit?” PC World.com (Aug. 11, 2014).

4. J. F. Schumacher, J. Van Derlofske, B. Stankiewicz, D. Lamb, and A. Lathrop, “PQM: A Quantitative Tool for Evaluating Decisions in Display Design,” Information Display (May/June 2013).

5. G. Loveridge, “White Paper – High resolution screens alone don’t produce high quality mobile video,” Pixelwork.com (Aug. 5, 2014).

6. Press Release: “Z Microsystems Debuts Intelligent Display Series Real-Time Enhanced Video,” zmicro.com (July 8, 2009).

7. R. L. Heckaman and J. Sullivan, “Rendering Digital Cinema and Broadcast TV Content to Wide Gamut Display Media,” SID Digest Tech. Papers (2011).

8. X. Yang, “Uniform Color Spaces based on CIECAM02 and IPT color Differences” (RIT Media Library, 2008).

9. J. Sullivan, “Superior Digital Video Images through Multi-Dimensional Color Tables,” eeColor.com.

Stefan Peana leads display technology development for the Chief Technology Office at Dell. He can be reached at Stefan_Peana@Dell.com. Jim Sullivan is founder and Chief Technology Officer of eeColor. He can be reached at Jim.Sullivan@entexpinc.com.